Almost every industry uses real-time data analytics solutions provided by data science. This is especially true in e-commerce, sports betting, trading, custom marketing, and politics, which requires real-time updates on election results.

Analytics solutions are designed to minimize the time spent making decisions. They have to be capable of collecting and promptly processing lots of data as real time provides many users with timely insights and analytics. Existing software solutions rarely offer exhaustive data in an easy-to-view format along with a personalized approach. But they really should!

Why should analytics software offer exhaustive data and a personalized approach?

Take Netflix, for example. Netflix collects information on customers’ searches, ratings, watch history, etc. As a data-driven company, Netflix uses machine learning algorithms and A/B tests to drive real-time content recommendations for its customers.

This approach enables Netflix to provide customers with personalized suggestions and content similar to what they’ve already watched, or to advise titles from a specific genre. Thanks to such personalization, Netflix saves $1 billion a year in value from customer retention.

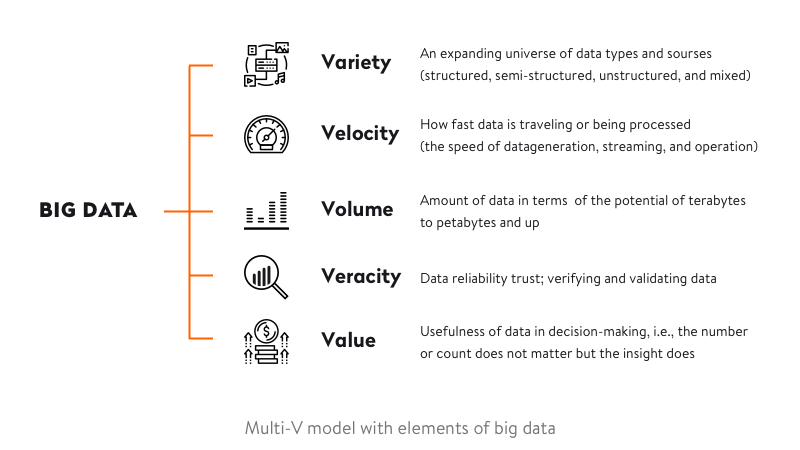

If your idea is to build a comprehensive but simple tool capable of speeding up data processing, this introduction to real-time big data management will give you a competitive advantage. Note that the tool you’ll create will have to deal with big data. To begin this complete guide to real-time big data processing, let’s define what exactly big data is.

Read also: How to Pick Your Niche in Video Streaming App Development

Big data: a big deal for making optimal decisions

Big data refers to data sets so large that traditional data processing software can’t handle them. Tools like Apache Storm and Apache Spark help businesses perform big data processing.

But extracting value from big data isn’t just about analyzing the data, which is a whole other challenge. It’s a comprehensive process that requires analysts and executives to find patterns, make logical assumptions, and predict the course of events.

To simplify this process, you can use big data analytics to reveal information regarding your processes, customers, market, etc. Real-time big data analytics involves pushing data to analytics software immediately when it arrives. Let’s view the whole path data goes along to help you and your customers make data-driven decisions.

Real-time big data analytics

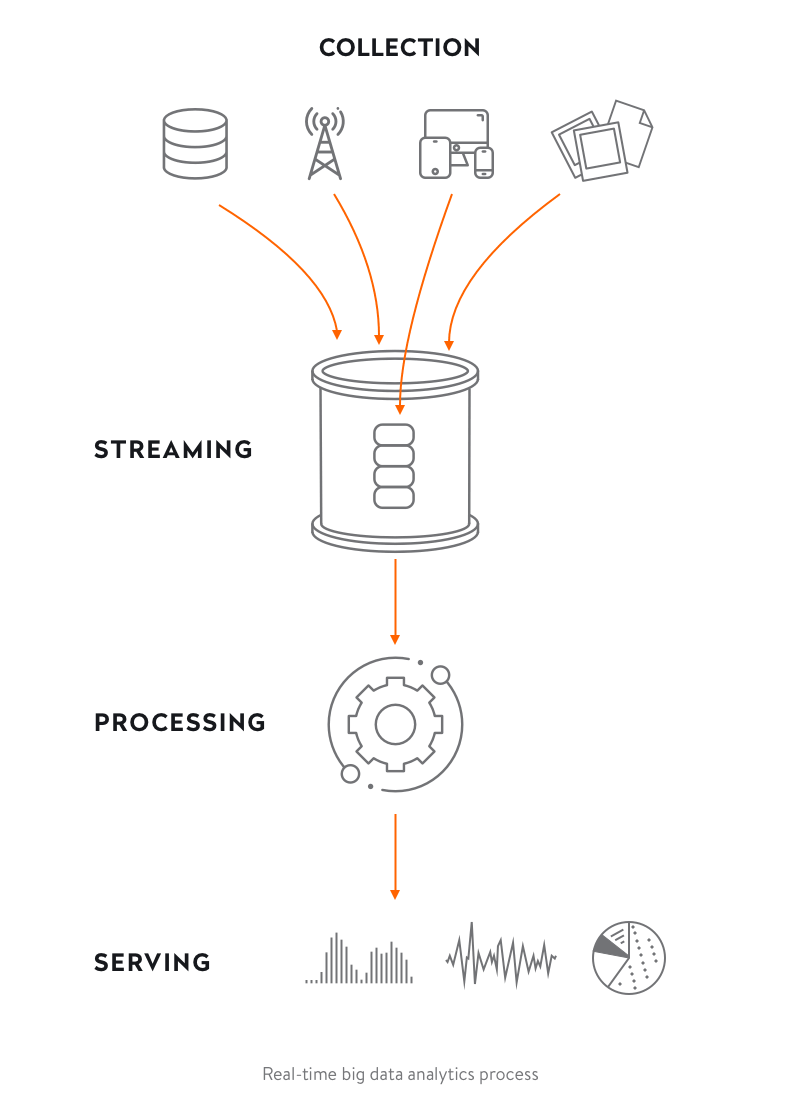

Analyzing big data in real time entails collecting, streaming, processing, and serving data to identify patterns or trends. This process is presented in the image below.

Data collection

Depending on what the data will be used for, the data collection process may look different from one organization to another.

Where might data be taken from?

If your purpose is to help users make decisions based on highly specialized data, you’ll need to implement third-party integrations with data sources. If you want to analyze users to offer them personalized service, you’ll need to collect data on users and their behavior. Either way, your purpose is to optimize customer service processes.

Third-party integrations. To get data from APIs, we suggest using a crawler, scheduler, and event sourcing. A crawler uses a scheduler to periodically request data from third-party APIs and records this data in a database. Event sourcing helps to atomically update data and publish events. Event sourcing applies an event-centric approach to persistence: a business object is persisted by storing a sequence of state-changing events. If an object’s state changes, a new event is added to the sequence.

Customer data. You can collect customer data by asking users for it directly (for instance, when they sign up for a subscription). Technologies such as cookies and web beacons on your website can help you in monitoring visitors’ browsing histories. Social media, email, and company records on customers are also great sources to pull data from.

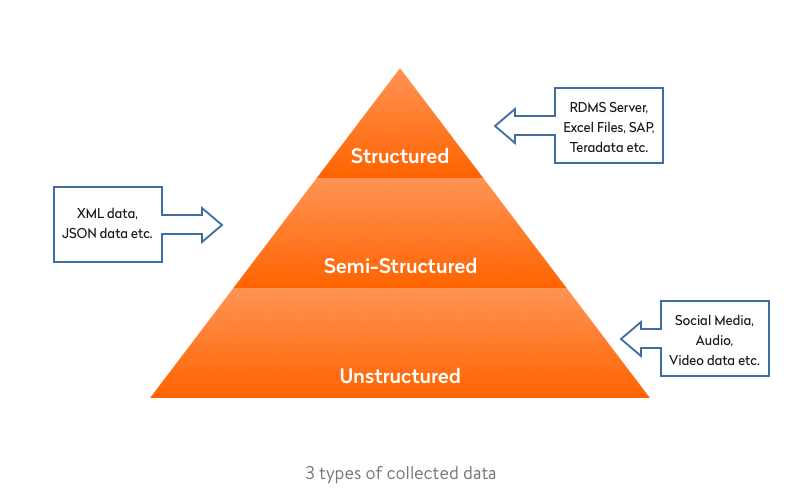

Three types of data that are collected

There are three types of data that are usually collected from cloud storage, servers, operating systems, embedded sensors, mobile apps, and other sources.

Structured data. This data is linear and is stored in a relational database (such as a spreadsheet). Structured data is much easier for big data tools to work with than are the other two types. Unfortunately, structured data accounts for just a small percentage of modern data.

Semi-structured data. This type of data provides some tagging attributes. But it isn’t easily understood by machines. XML files and email messages are examples of semi-structured data.

Unstructured data. Nowadays, most data is unstructured. It’s mostly produced by people in the form of text messages, social media posts, video and audio recordings, etc. As this data is diverse (and partially random), it takes big data tools more time and effort to make sense of it.

You might need to store past data to enable a user to compare it with real-time events. For example, a sports betting app might help users make betting decisions based on old data by viewing analytics in a given year. A user might also be able to compare a specific type of data in the current period.

That’s why a database has to be capable of storing and passing data to a user with minimal latency. Databases have to be able to store hundreds of terabytes of data, manage billions of requests a day, and provide near 100 percent uptime. NoSQL databases like MongoDB are typically used to tackle these challenges.

The data you collect then needs to be processed and sorted. Let’s discuss how this should be done.

Read also: Choosing the Right Database

Real-time data streaming and processing

There are two techniques used for streaming big data: batch and stream. The first is a collection of grouped data points for a specific time period. The second handles an ongoing flow of data and helps to turn big data into fast data.

Batch processing requires all data to be loaded to storage (a database or file system) for processing. This approach is applicable if real-time analytics isn’t necessary. Batching big data is great when it’s more important to process large volumes of data than to obtain prompt analytics results. However, batch processing isn’t a must for working with big data, as stream processing also deals with large amounts of data.

Unlike batch processing, stream processing processes data in motion and delivers analytics results fast. If real-time analytics are critical to your company’s success, stream processing is the way to go. This methodology ensures only the slightest delay between the time data is collected and processed. Such an approach will enable your business to be transformed fast if needed.

There are open-source tools you can use to ensure real-time processing of big data. Here are some of the most prominent:

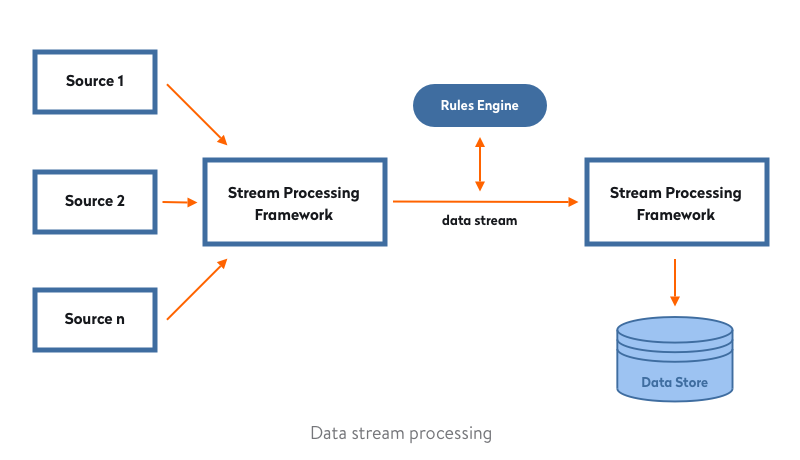

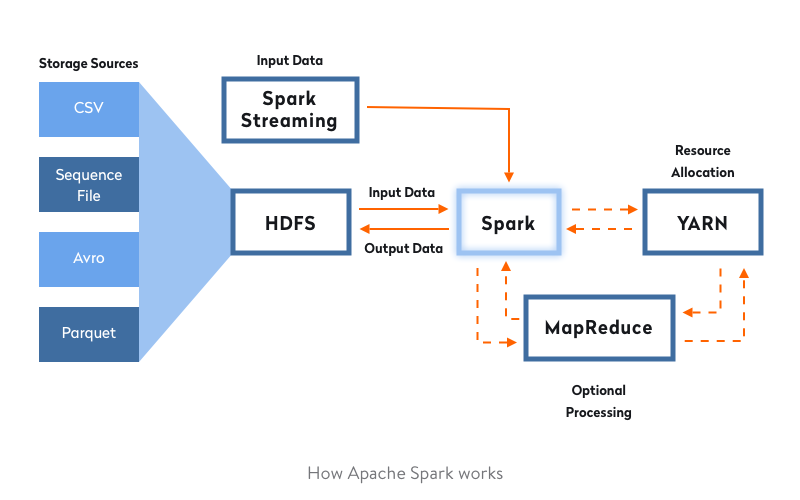

Apache Spark is an open-source stream processing platform that provides in-memory data processing, so it processes data much faster than tools that use traditional disk processing. Spark works with HDFS and other data stores including OpenStack Swift and Apache Cassandra. Spark’s distributed computing can be used to process structured, unstructured, and semi-structured data. Furthermore, it’s simple to run Spark on a single local system, which makes development and testing easier.

Apache Storm is a distributed real-time framework used for processing unbounded data streams. Storm supports any programming language and processes structured, unstructured, and semi-structured data. Its scheduler distributes the workload to nodes.

Apache Samza is a distributed stream processing framework that offers an easy-to-use callback-based API. Samza provides snapshot management and fault tolerance in a durable and scalable way.

World-recognized companies often choose these well-established solutions. For example, Netflix uses Apache Spark to provide users with recommended content. Because Spark is a one-stop-shop for working with big data, it’s increasingly used by leading companies. The image below shows the principle of how it works:

You can also opt for building a custom tool. Which way you should go depends on your answers to three how much questions:

– How much complexity do you plan to manage?

– How much do you plan to scale?

– How much reliability and fault tolerance do you need?

If you’ve managed to implement the above-mentioned process properly, you won’t lack users. So how can you get prepared for them? By ensuring…

High load capabilities and scalability

A properly developed system might attract lots of users. So make sure it will be able to withstand high loads. Even if your project is rather small, you might need to scale.

What is high load?

– High load starts when one server is unable to effectively process all of your data.

– If one instance serves 10,000 connections simultaneously, you’re dealing with a high load.

– A high load is simultaneously handling thousands or millions of users.

– If you deploy your app on Amazon Web Services, Microsoft Azure, or Google Cloud Platform, you’re automatically provided with high load architecture.

Principles of building apps capable of handling high loads

Dynamics and flexibility. When building large-scale apps, your focus should be on flexibility. With a flexible architecture, you can make changes and extensions much easier. This means lower costs, less time, and less effort. Note that flexibility is the most essential element of a fast-growing software system.

Scalability. Businesses should tailor scalability to their development purposes, meaning in accordance with the number of expected users. Make sure you carry out preliminary research to ensure your app is scalable enough to meet the expected load. The same applies to the app architecture. Keep in mind that scalable products are the foundation of successful software development.

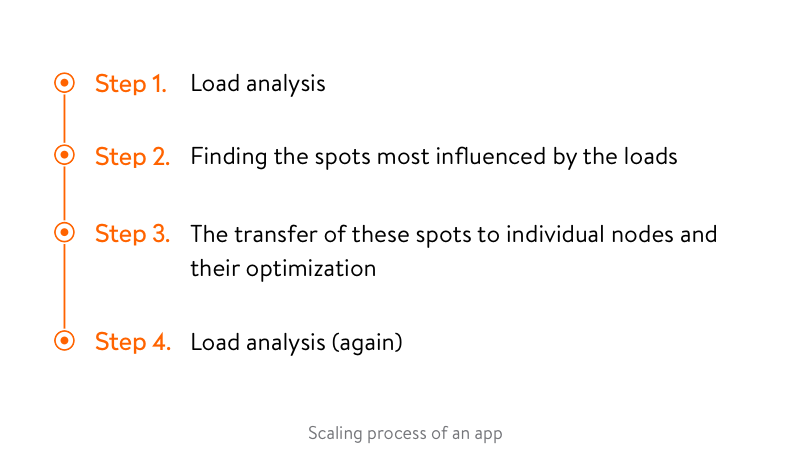

Scaling any app involves four steps:

If you’re building a new app, it isn’t necessary to immediately ensure an infrastructure capable of dealing with millions of users and processing millions of events daily. But we suggest designing the app to scale from the outset. This will help you avoid large-scale changes in the long run.

We also recommend using the cloud to host new projects, as it reduces the server cost and improves management.

In addition, lots of cloud hosting services offer private network services. These services enable developers to safely use multiple servers in the cloud and make systems scalable.

Read also: How to choose the proper web hosting service for a website

The following are some tips to create scalable apps:

Choose the right programming language. Erlang and Elixir, for example, ensure actor-based concurrency out of the box. These languages permit processes to communicate with each other even if they’re on different physical machines. As a result, developers don’t have to worry about the underlying network or the protocol used for communication.

Ensure communication between microservices. The communication channel between your services should also be scalable. To accomplish this you can apply a messaging bus, which is infrastructure that helps different systems communicate by means of a shared set of interfaces. This will allow you to build a loosely coupled system to scale your services independently.

Avoid a single point of failure. Make sure there’s no single resource that might crash your entire app. To ensure this, have several replicas of everything. We suggest running a database on multiple servers. Some databases support replication out of the box — for instance, MongoDB. Do the same with your backend code, using load balancers to run it on multiple servers.

The role of databases in handling high loads

Traditional relational databases are not tailored to benefit from horizontal scaling. A new class of databases, dubbed NoSQL databases, are capable of taking advantage of the cloud computing environment. NoSQL databases can natively manage the load by spreading data among lots of servers, making them fit for cloud computing environments.

One of the reasons why NoSQL databases can do this is that related data is stored together instead of in separate tables. Such a document data model, used in MongoDB and other NoSQL databases, makes these databases a natural fit for cloud computing environments.

To ensure the high load capability of our client’s World Cleanup Day app, we used PostgreSQL, which provides simultaneous I/O operations and handles high loads. Check out our case study for more details.

Read also: API Load Testing

As a result of the above-mentioned implementations and the use of big data technology described in this guide, you’ll be able to provide users with software for deep analytics and reporting that processes and visualizes real-time data collected from multiple sources. Yalantis is experienced in developing mobile- and web-friendly analytics solutions with the lifecycle of collecting data from several sources, building attractive charts, and displaying predictions. We’ll gladly help if you’re interested in big data consulting and developing a big data infrastructure.

Ten articles before and after

Detailed Analysis of the Top Modern Database Solutions

Measuring Code Quality: How to Do Android Code Review

Android Studio Plugin Development

How to Quickly Import Data from JSON to Core Data

Using RxSwift for Reactive Programming in Swift

Which Javascript Frameworks to Choose in 2021

Best Tools and Main Reasons to Monitor Go Application Performance

How to Deploy Amin Panel Using QOR Golang SDK: Full Guide With Code